-

Continue reading →: ‘pac code push’ | Cannot read properties of undefined (reading ‘httpClient’)

Recently I was working on a Power Apps Code App project and tried to push my changes to Dataverse using the Power Platform CLI. Instead of pushing the app, however, the command failed with the following error…

-

Continue reading →: How to Connect Dataverse MCP Server to Claude Code

In this article, let’s explore how to connect the Dataverse MCP server to Claude Code. Once it is configured, Claude Code can interact directly with your Dataverse environment using MCP tools. This allows Claude to retrieve schema information, query tables, and assist with Dataverse development tasks. Prerequisites: Before using the Dataverse MCP…

-

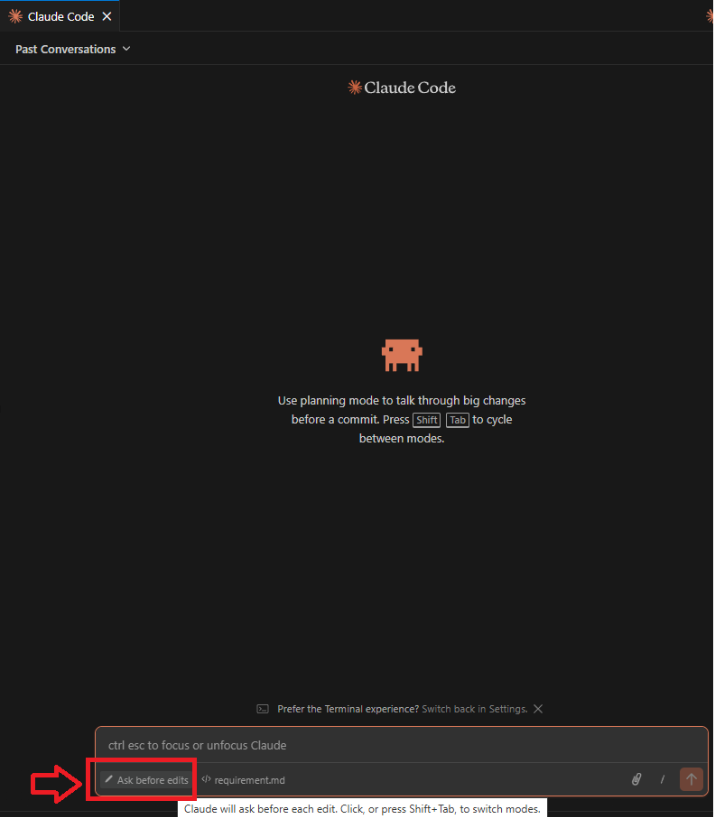

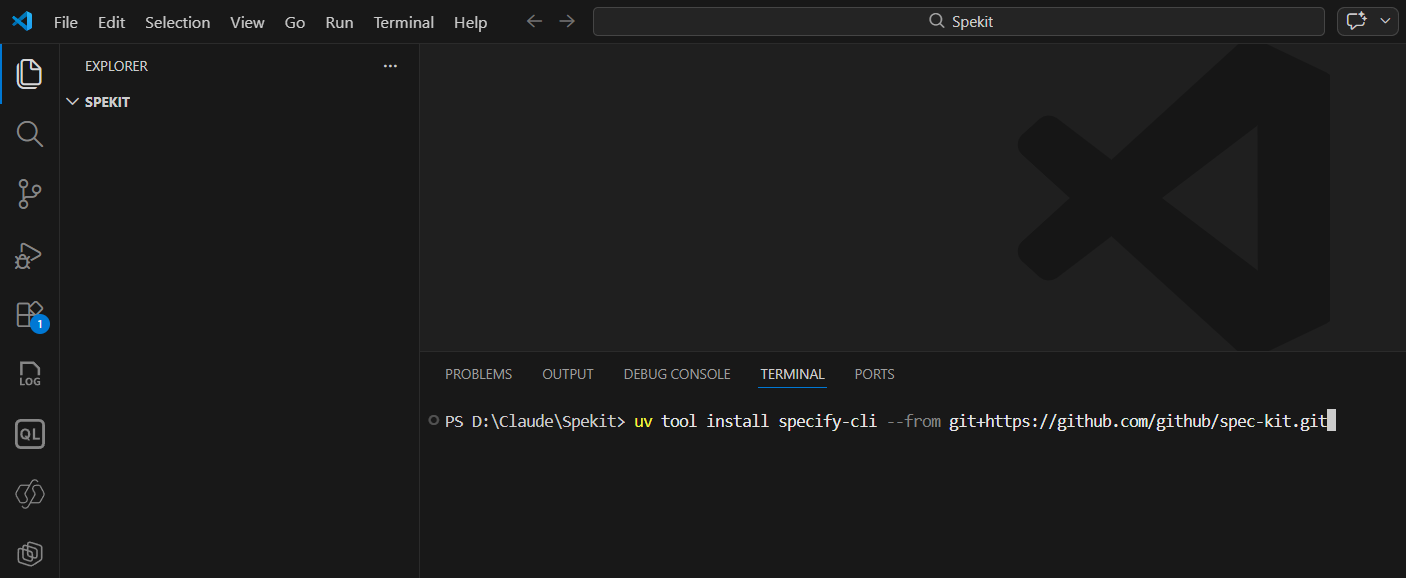

Continue reading →: GitHub Spec-Kit with Claude Code: Build a React App Using Spec-Driven AI

I recently tried GitHub Spec-Kit to understand how Spec-Driven Development with AI agents works. Instead of simply prompting an AI to “build an app,” Spec-Kit introduces structure: Define principles → Define requirements → Create a plan → Break into tasks → Implement. To understand this properly, I built a super…

-

Continue reading →: Fix “running scripts is disabled” error while running ‘npm’

If you recently installed Node.js on your Windows machine and tried running the npm command, you might have encountered a "running scripts is disabled" error. This happens because of a PowerShell security restriction: by default, PowerShell blocks script execution for security reasons. How do you fix it? To fix this, we need…

-

Continue reading →: Claude Code | Organize Your Project Using CLAUDE.md

In my previous blog post, Create a ‘To-Do List’ App in VS Code using Claude Code, I explained how to build a simple app from scratch. The app works, but I noticed a few areas for improvement. In this article, let’s see how CLAUDE.md helps address them.…

-

Continue reading →: Getting Started with Claude Code | Create a ‘To-Do List’ App in VS Code

In this blog post, I will explain how you can get started with Claude Code and build your first simple application. Let’s start with the basics and prerequisites: what Claude Code is, creating a Claude account, and installing Claude Code. To install Claude Code on all platforms, refer to the official…

-

Continue reading →: Copilot Studio: ‘Component Collections’ in action

In my previous article, Build an Agent Using Microsoft Planner, I built an agent that connects to Microsoft Planner and creates tasks. To achieve that functionality, I configured the required Tools directly inside the agent. Now, let’s consider a new requirement. Suppose you need to build another agent…

-

Continue reading →: Copilot Studio: Build an Agent Using Microsoft Planner

In my previous blog post, I explained how to build Microsoft Copilot Studio agents with Knowledge and Topics (Agent Flows), using a Running Registration Agent as the example. In this post, we’ll build an agent that can collect details from the user, generate a running training plan, and turn that plan into…

-

Continue reading →: Agent Platform Advisor: Choosing the Right Microsoft Copilot Made Easy

Microsoft now offers many Copilot options: Copilot Chat, Microsoft 365 Copilot, Microsoft Agents, Copilot Studio, Foundry, and even third-party agents. The ecosystem is powerful, but for most people it creates confusion, and it is often hard to tell which option fits a given scenario. This is where the Agent Platform Advisor from the…
