In this article, let’s learn how to load data from an Excel sheet into multiple Dataverse tables using Dataflows.

Lets first understand what dataflows are.

What are dataflows?

- Dataflows are a self-service, cloud-based, data preparation technology.

- Following diagram shows an overall view of how a dataflow is defined.

- A dataflow gets data from different data sources.

- Then, based on the transformations configured with the Power Query authoring experience, the dataflow transforms the data by using the dataflow engine.

- Finally, the data is loaded to the output destination, which can be a Power Platform environment, a Power BI workspace, or the organization’s Azure Data Lake Storage account.

Now that we know the basics, let’s learn about dataflows using the following scenario.

Scenario:

- Create two Dataverse tables named ‘Company’ and ‘Employee’ and establish a relationship between them.

- Prepare data for both ‘Company’ and ‘Employee’ tables in Excel sheet.

- Configure Dataflow and load excel data to Dataverse.

Let’s begin the execution of scenarios.

Create Dataverse tables:

- From the power platform maker portal, create or open a Dataverse solution.

- Create ‘Company’ and ‘Employee’ Dataverse tables and establish a One to Many relationship between ‘Company’ and ‘Employee’.

- As an optional step, you may also create a Model-Driven App as outlined below to access the data.

Now that we have the destination Dataverse tables ready, let’s prepare the source data (i.e., Excel sheet).

Prepare Data:

- Create an Excel sheet and add a tab ‘Company,’ then populate the data as shown below.

- Add another tab ‘Employee’ and populate the data as indicated below.

- Save the Excel sheet. You can either upload this Excel file to OneDrive or save it locally.

We now have both destination Dataverse tables and source data (i.e., Excel sheet). Let’s begin configuring the Dataflow.

Configure Dataflow:

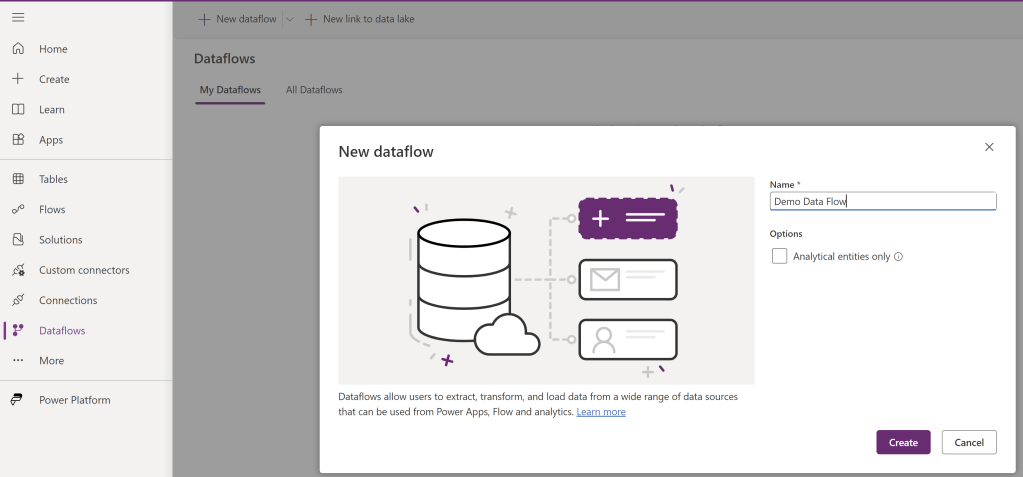

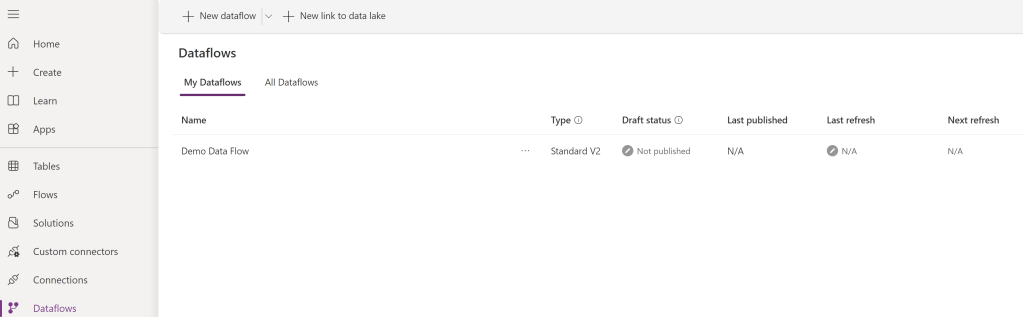

- From the Power Apps maker portal, Click on Dataflows > New dataflow.

- Enter a ‘Name’ and click on ‘Create’.

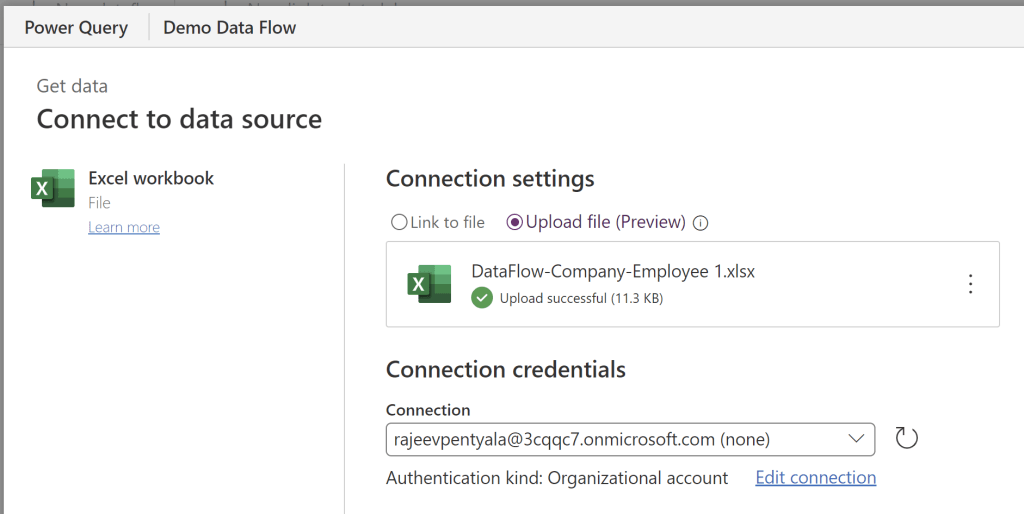

- In our example, as we are uploading data from an Excel sheet, click on ‘Excel workbook’ in the ‘Choose data source’ pane.

- I have selected the ‘Upload file (Preview)’ option, and I browsed the Excel file from my machine. Alternatively, you can also upload the file from OneDrive if you prefer.

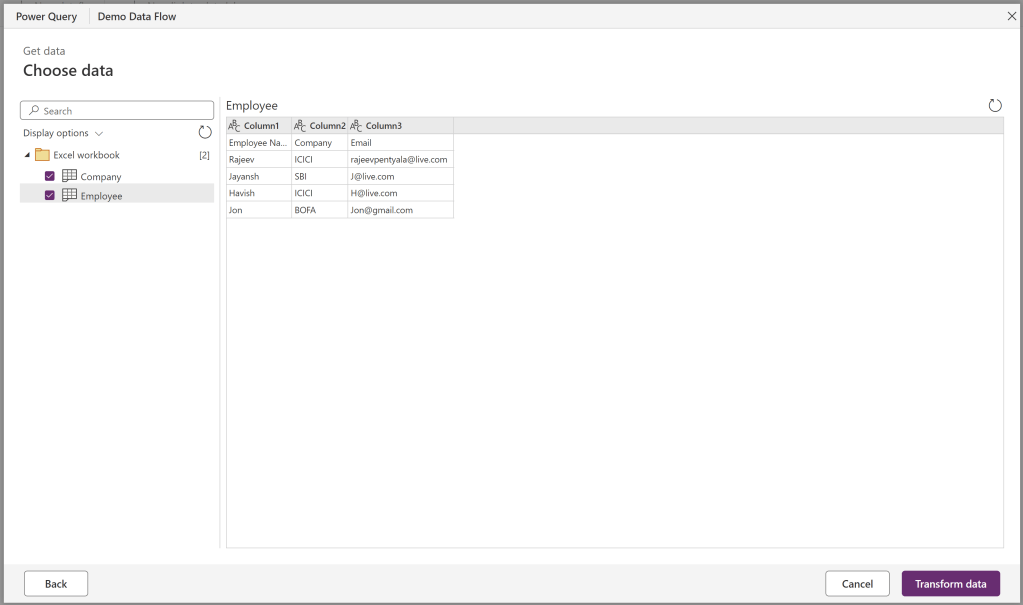

- Click ‘Next,’ and in the next pane, select both ‘Company’ and ‘Employee,’ then click on ‘Transform data‘.

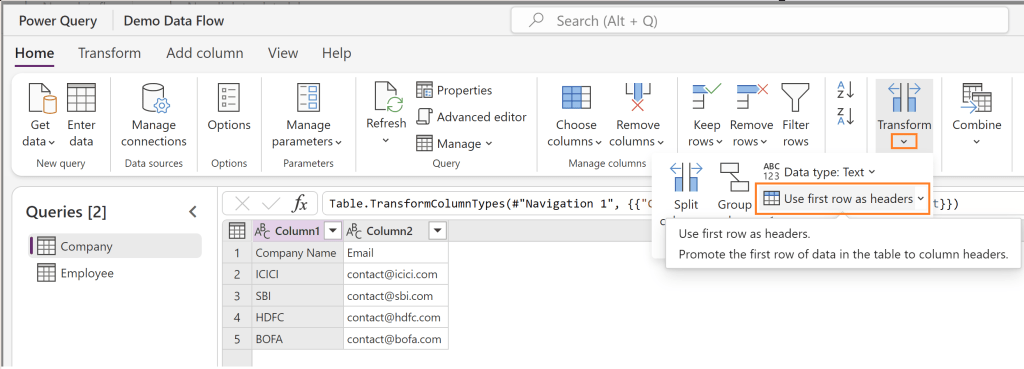

- In the next pane, you can perform data transformations. For example, if you want the first row of the tables to be treated as headers, select both tables and click on ‘Use first row as headers‘.

- Repeat the above step for ‘Employee’ table as well.

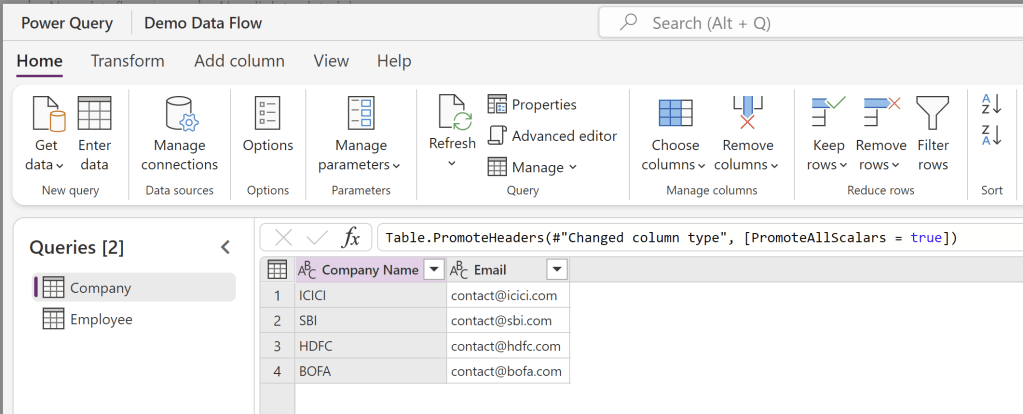

- After clicking on ‘Use first row as headers,’ the table appears as follows.

- An important step is next, as we will be loading data for both ‘Company’ and ‘Employee’, in a single dataflow and since both tables are related , the order is crucial. Ensure that the ‘Company’ table is positioned before the ‘Employee’ table. Use the drag feature to adjust the table order.

- In my example, as the ‘Company’ table is already positioned before the ‘Employee’ table, there is no need for reordering.

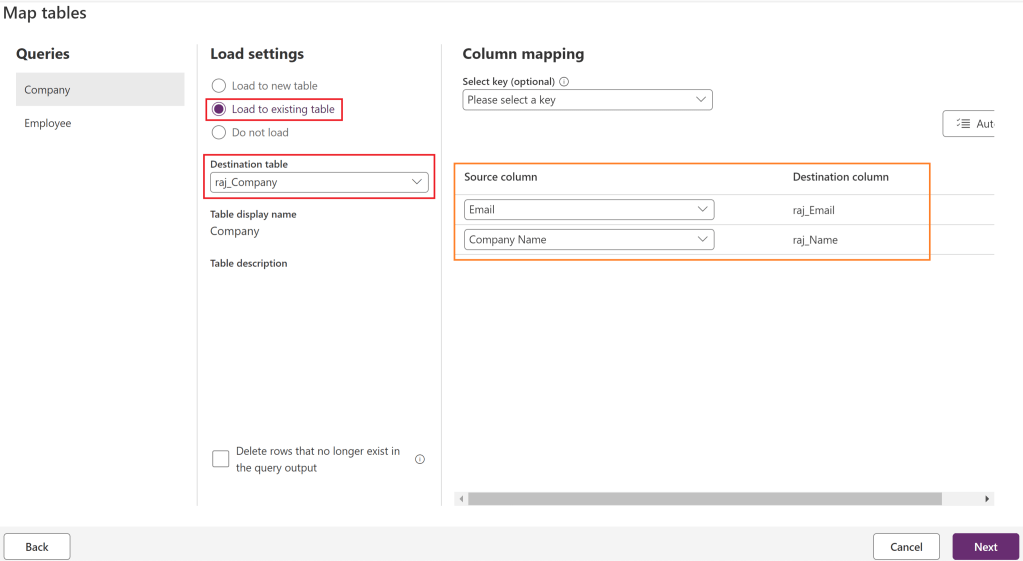

- Click on ‘Next,’ which will take us to the ‘Map tables’ section where we map the source (i.e., ‘Excel’) columns with the destination (i.e., Dataverse table) columns..

- Select the ‘Company’ table and,

- Load settings > Load to existing table

- Destination table > ‘raj_Company’ (i.e., schema name of the Dataverse table)

- Choose columns under ‘Source column’ and ‘Destination column’ section as below.

- We have completed the mappings for the ‘Company’ table, which is straightforward.

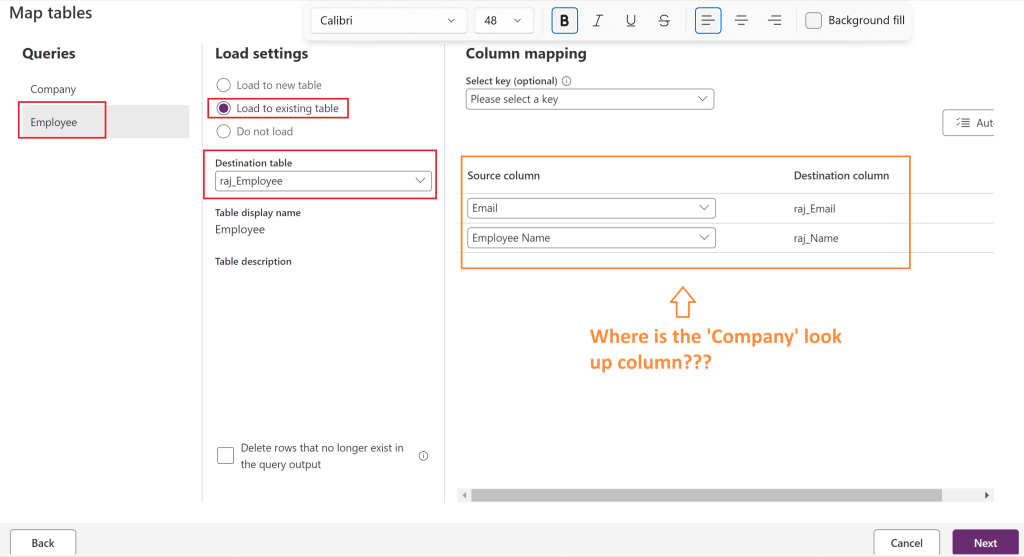

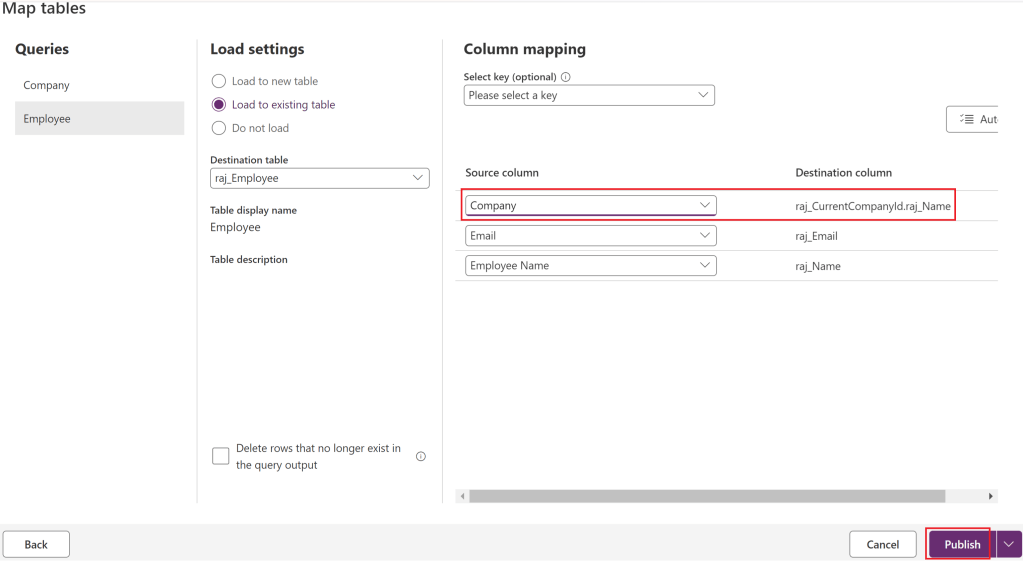

- Let’s start the mapping of the ‘Employee’ table, which is a bit tricky.

- Select ‘Employee’ table and,

- Load settings > Load to existing table

- Destination table > ‘raj_Employee’ (i.e., schema name of the Dataverse table)

- Choose columns under ‘Source column’ and ‘Destination column’ section as below.

- However, where is the ‘Company’ lookup column under the ‘Source column’ and ‘Destination column’ sections? This is the tricky part; to map lookup fields in Dataflow, we need to create Alternate Keys.

- We need to perform an additional prerequisite step of creating an alternate key on the ‘Company’ table.

- I could have explained this prerequisite step earlier, but I wanted you to understand the relevance of this step, hence the backtrack. Now that you are aware, please create the alternate key before you start the Dataflow configuration next time.

- Click on ‘Cancel,’ which will save the Dataflow in the ‘Not published’ state. We will resume our configuration once we have created the alternate key.

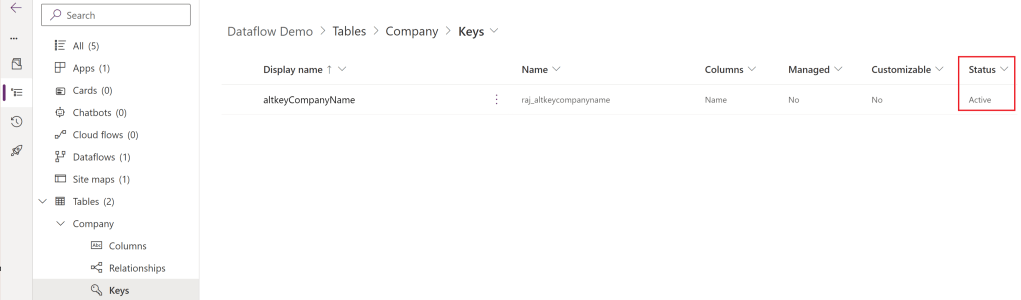

Create alternate key on parent table (i.e., Company):

- Select the ‘Company’ table, Click on New > Key.

- Select the ‘Name’ under the ‘Columns’ and Save.

- As we have chosen ‘Name’ as the alternate key, we cannot have duplicate records with the same Company ‘Name’.

- Alternate keys take time to get activated. Ensure that the ‘Status’ has turned to ‘Active’.

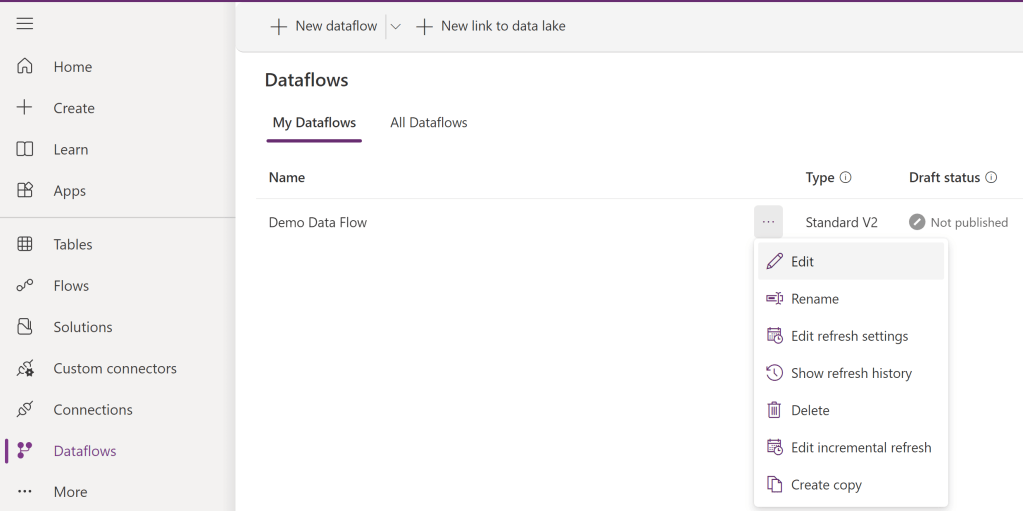

Resume the Dataflow configuration:

- Now that we have the key, let’s resume the Dataflow configuration. Select the Dataflow and click on ‘Edit’.

- You will need to redo the mapping step for both the ‘Company’ and ‘Employee’ tables.

- “Now that we have created an alternate key on the ‘Company’ table, we will be able to map the ‘Company’ lookup column.

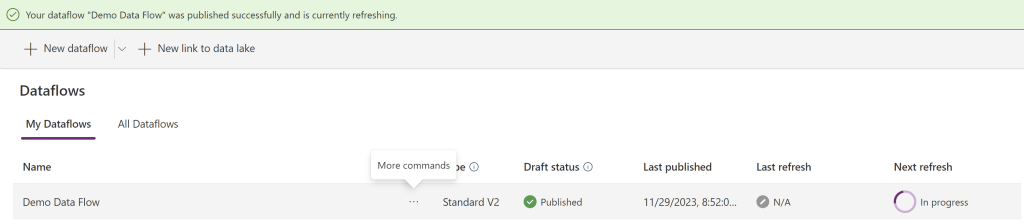

- Click on ‘Publish.’ The Dataflow will be published, and the data refresh will start (i.e., loading Excel data to Dataverse). Please observe the ‘In Progress’ status in ‘Next Refresh.’

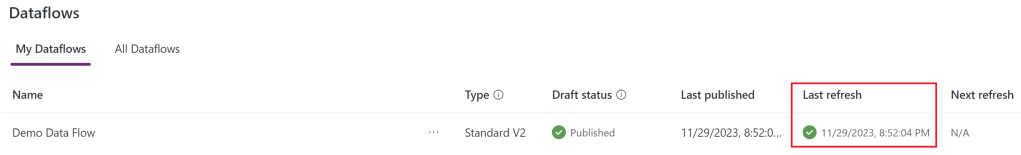

- Depending on your data size, the refresh may take some time. After a successful refresh, you will notice the ‘Last Refresh’ populated with a timestamp.

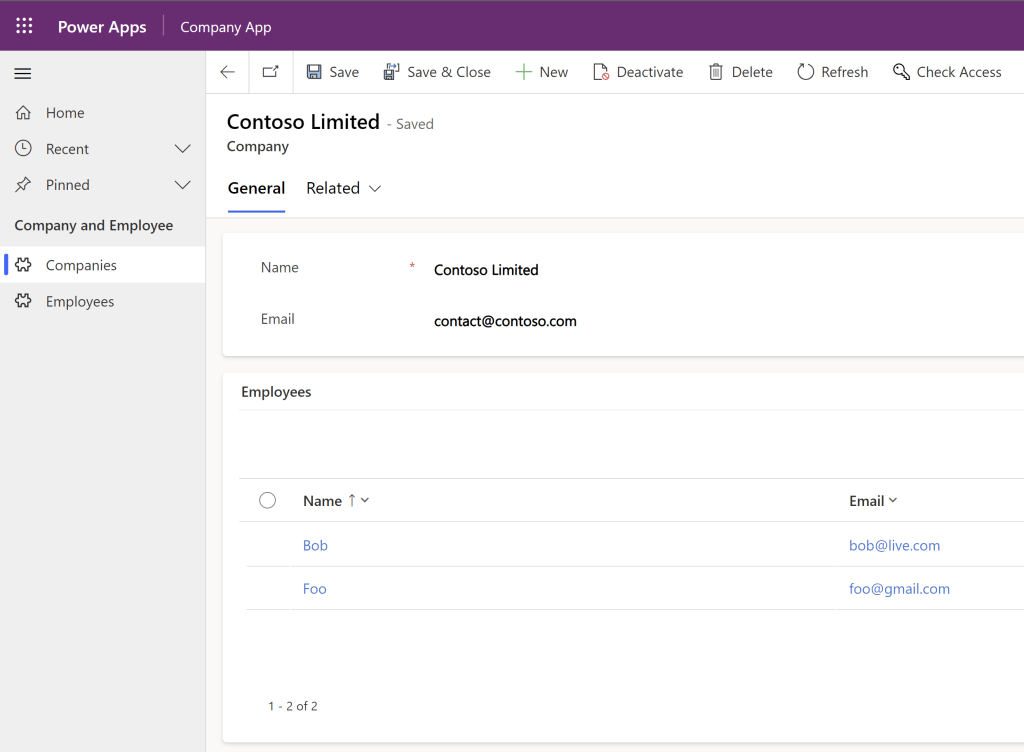

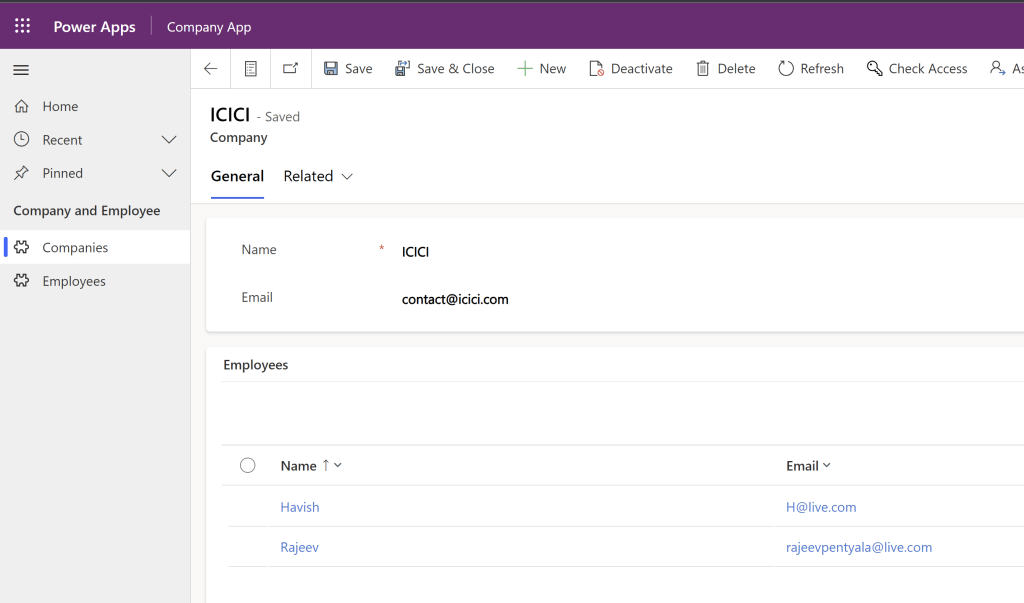

- Navigate to your Model-Driven App and refresh. You should observe that the data has been imported from excel file to both ‘Company’ and ‘Employee’ tables.

- Open one of the companies, and you should observe the ‘Employees’ listed under it.

- We have covered the basics of porting data from Excel to Dataverse using Dataflows.

Add the Dataflow to Dataverse solution:

- We can add an existing Dataflow to a solution which makes it as solution-aware dataflows. You can add multiple dataflows to a single solution.

- The data loaded by dataflows to their destination isn’t portable as part of solutions, only the dataflow definitions are.

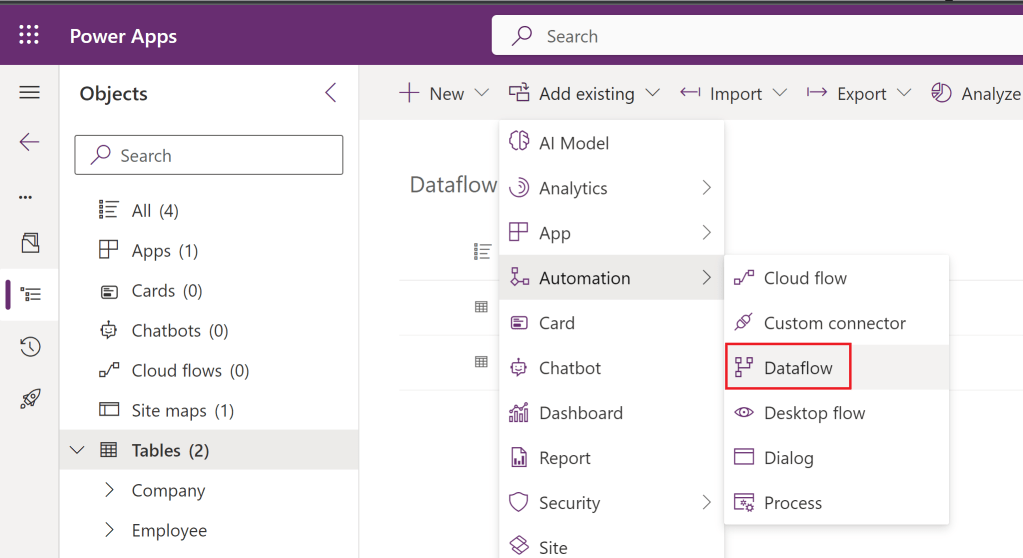

Lets see how to add Dataflow to a solution.

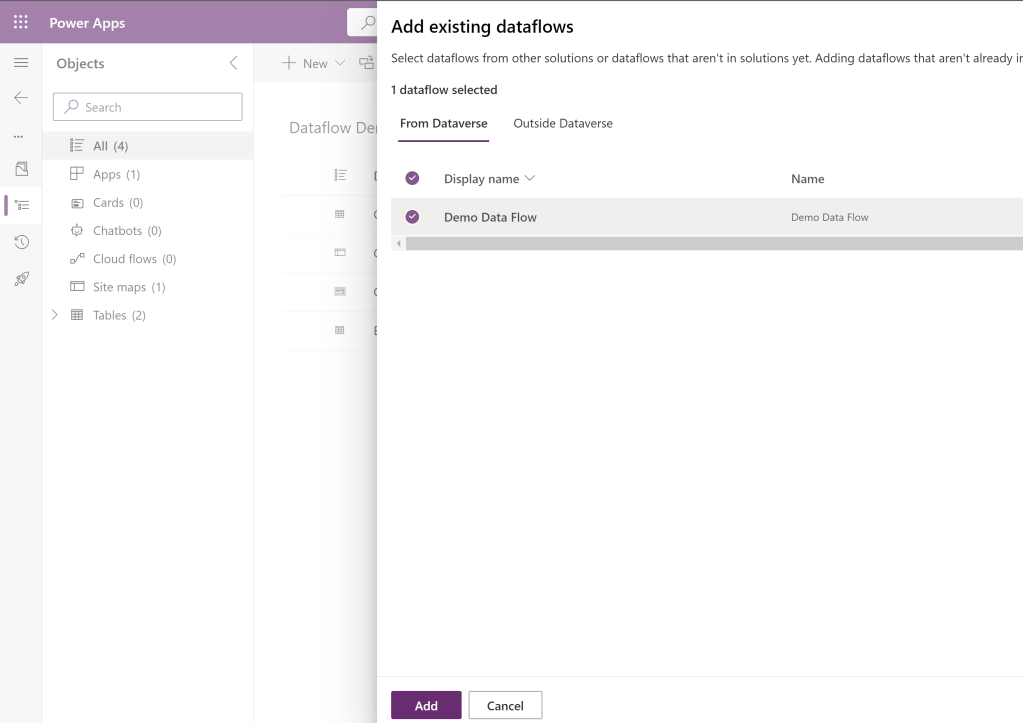

- Open the solution, Select Add Exiting > Automation > Dataflow

- Select the Dataflow and click ‘Add.’ With that, the Dataflow is now part of the solution and can be transported to other environments.

- Exporting and importing solutions containing dataflows is identical to doing the same operations for other artifacts.

🙂

![[Step by Step] Beginner : Create a PCF control and add it to a custom page](https://rajeevpentyala.com/wp-content/uploads/2024/12/image-49.png)

Leave a comment